Tips for identifying AI-generated content


- Published on June 21, 2023 at 14:14

- Updated on July 3, 2023 at 14:32


- By Juliette MANSOUR, Erin FLANAGAN, Sophie NICHOLSON, AFP France

Some ultra-realistic images of news events have already been mistaken for real ones and shared on social media platforms.

How can you tell a genuine image from a computer-generated one? Visual inconsistencies and a picture's context can help - but there is no foolproof method of identifying an AI-generated image, specialists told AFP.

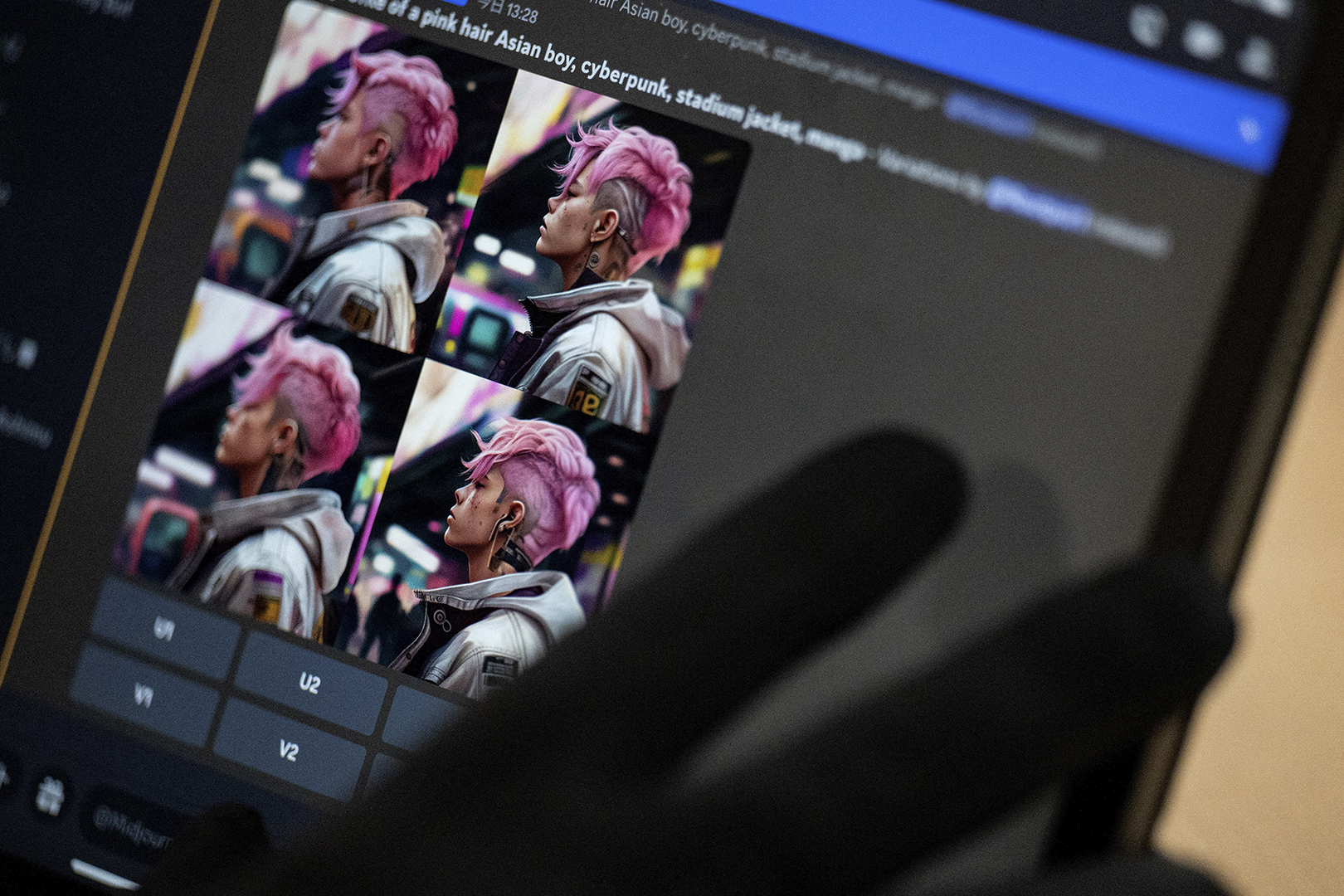

Recently developed AI tools such as Midjourney, DALL-E, Craiyon, Leonardo.Ai or Stable Diffusion can generate an almost unlimited number of images by drawing on massive databases.

Users type in a series of keywords, known as a "prompt", and the tool generates an image based on their request.

Many people use these tools for humorous or artistic purposes, but others rely on them to fabricate images of politically charged news events.

For example, an AI-generated image showing a fiery explosion outside the Pentagon circulated on social media on May 22, 2023, causing confusion and appearing to trigger a brief dip on Wall Street.

Other fabricated pictures claimed to show a non-existent "Satanic" clothing line for children at US retail giant Target.

Although most creators clearly state that these images are fabricated, some images circulate without context or are presented as authentic.

Developers have launched tools, some of them hosted on platforms such as Hugging Face, to try to detect these fakes. But according to tests by AFP, the results are mixed and can sometimes be misleading.

"When AI is generating pictures (from scratch), there is, in general, not a single original image from where parts are taken," David Fischinger, an AI specialist and engineer at the Austrian Institute of Technology, told AFP. "There are thousands/millions of photos that were used to learn billions of parameters."

Vincent Terrasi, co-founder of Draft & Goal, a startup that launched an AI detector for universities, added: "The AI mixes these images from its database, deconstructs them and then reconstructs a photo pixel by pixel, which means that in the final rendering, we no longer notice the difference between the original images."

That is why manipulation detection software works poorly, if at all, in identifying AI-generated images. Metadata, which gives context about how and when a picture was taken, is not useful for AI-generated images shared online since social media usually erases this information.

"Unfortunately, you cannot rely on metadata since on social networks they are completely removed," AI expert Annalisa Verdoliva, professor at the Frederick II University of Naples, told AFP.
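Checking whether a downloaded file still carries metadata is easy to try yourself. The sketch below is a minimal, illustrative example using only the Python standard library: it walks the marker segments at the start of a JPEG file and reports whether an EXIF block (an APP1 segment beginning with `Exif`) survived. The function name `has_exif` is our own, and it handles JPEG files only. As the experts note, a missing EXIF block proves nothing either way, and metadata that is present can be edited or forged.

```python
import struct


def has_exif(data: bytes) -> bool:
    """Return True if JPEG bytes contain an APP1 segment with an EXIF header.

    Walks the metadata segments that precede the image data and stops at
    start-of-scan (0xFFDA), after which compressed pixel data begins.
    """
    if data[:2] != b"\xff\xd8":  # every JPEG file starts with the SOI marker
        raise ValueError("not a JPEG file")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            return False  # malformed stream: give up rather than guess
        marker = data[pos + 1]
        if marker == 0xFF:  # padding fill byte before a marker, skip it
            pos += 1
            continue
        if marker in (0xDA, 0xD9):  # start-of-scan or end-of-image: no more metadata
            return False
        # Segment length is big-endian and counts its own two bytes.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        payload = data[pos + 4:pos + 2 + length]
        if marker == 0xE1 and payload.startswith(b"Exif\x00\x00"):
            return True
        pos += 2 + length  # jump to the next marker
    return False
```

For a hypothetical file `photo.jpg` saved from a social network, `has_exif(open("photo.jpg", "rb").read())` is likely to return `False`, which is exactly why this clue rarely helps.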

Go back to the image source

Experts say one important clue is finding the first time the picture was posted online.

In some cases, the creator may have said it was AI-generated and indicated the tool used.

A reverse image search can help by checking whether the picture has been indexed by search engines and by finding older posts with the same photo.
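Search engines do not publish exactly how their reverse image search works. One widely described technique for spotting copies of the same picture that have been resized or recompressed is perceptual hashing. The sketch below is an illustrative Python implementation of a simple "average hash"; it assumes the image has already been decoded into a grid of grayscale values from 0 to 255 (for example with an imaging library), and the function names are hypothetical.

```python
def average_hash(gray: list[list[float]], size: int = 8) -> int:
    """Return a size*size-bit average hash of a 2D grid of grayscale values."""
    height, width = len(gray), len(gray[0])
    if height < size or width < size:
        raise ValueError("image is smaller than the hash grid")
    cells = []
    # Shrink the image to size x size by averaging rectangular blocks of pixels.
    for i in range(size):
        r0, r1 = i * height // size, (i + 1) * height // size
        for j in range(size):
            c0, c1 = j * width // size, (j + 1) * width // size
            block = [gray[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    # One bit per cell: 1 if the cell is brighter than the overall mean.
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bits that differ between two hashes."""
    return bin(a ^ b).count("1")
```

Two copies of the same picture typically produce hashes only a few bits apart, while unrelated pictures differ by many bits. A simple average hash like this copes with resizing and uniform brightness changes, but heavy cropping can change it substantially.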

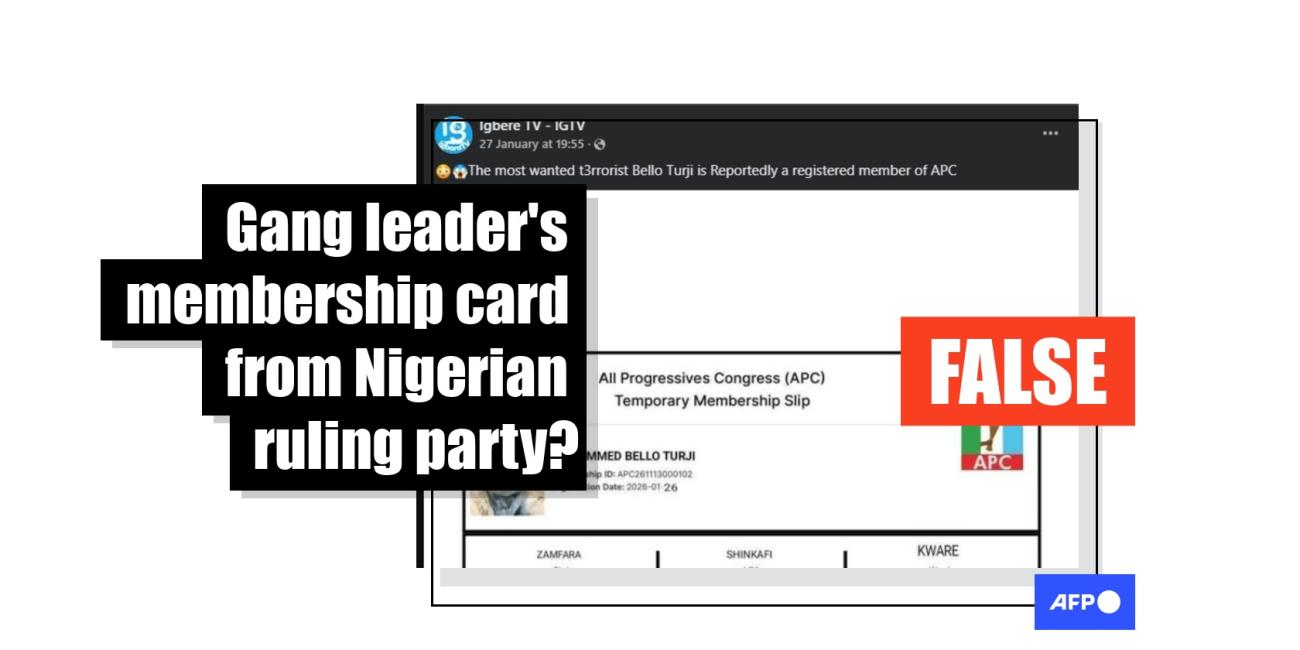

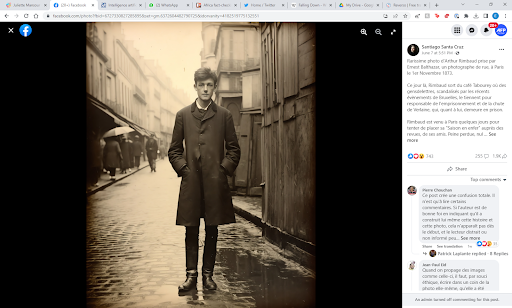

This method revealed the source of images allegedly showing the French poet Arthur Rimbaud standing on a road in Paris in 1873. The photo was purportedly taken by a street photographer called Ernest Balthazar.

A Google reverse image search for one of these images leads to the oldest version of the picture, published on a social media site, where the artist clearly states that it was created with artificial intelligence.

"This is how this sad day…could have played out if Rimbaud had indeed crossed paths with Ernest Balthazar. So I helped the story along, played with destiny and constructed a dream by creating an image probably not too far from reality", the artist wrote alongside the image.

He also responded to a user in the comments saying, "It was republished with the words ‘image created by AI’, so it’s all good".

His social media page includes several more AI-generated images and even a video of the young poet.

Screenshot of the artist’s post, taken June 19, 2023

If you cannot find the original photo, a reverse image search can lead to a better-quality version of the picture if it has been cropped or modified while being shared. A sharper photo will be easier to analyse for errors that might reveal the image is computer-generated.

A reverse image search will also find similar pictures, which can be valuable for comparing potential AI-generated photos with those from reliable sources.

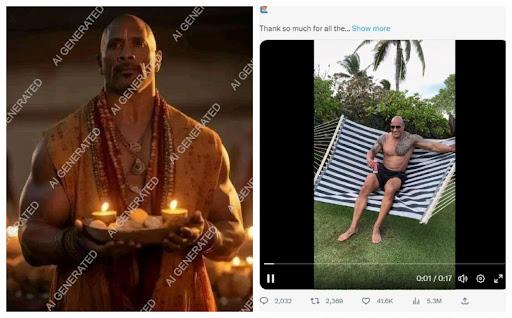

One viral picture allegedly showing Hollywood star Dwayne Johnson dressed as a Hindu holy man does not show the actor’s distinctive chest and arm tattoos.

Screenshot comparison between the AI-generated image being falsely shared online (left) and a video from Johnson's official Twitter account (right)

This technique can help identify reliable pictures of major events from trustworthy media.

Photo captions and online comments can also be useful in recognising a particular style of AI-generated content. DALL-E, for example, is known for its ultrarealistic designs and Midjourney for its scenes showing celebrities.

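Some tools can also leave a public trace of the images they generate. Midjourney, for example, is used through conversation channels on the Discord platform, where images created in public channels can be viewed by other users.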

Visual clues

Even without knowing a photo's source, you can analyse the image using visual clues.

Look for a watermark

Sometimes clues are hidden in the photo, such as a watermark used by some AI creation tools.

DALL-E, for example, automatically adds a multi-coloured bar to the bottom right of its images. Craiyon places a small red pencil in the same place.

Image generated by AFP on March 22, 2023 using DALL-E and the phrase: "A lolipop being held by a kid on a beach"

Image generated by Craiyon.com on March 22, 2023 using the phrase: "Pen on a table"

But not all AI-generated images have watermarks, and watermarks can be removed, cropped or hidden.

Tips from the art world

Tina Nikoukhah, a doctoral student studying image processing at ENS Paris-Saclay University, told AFP: "If in doubt, look at the grain of the image, which will be very different for an AI-generated photo from that of a real photo."

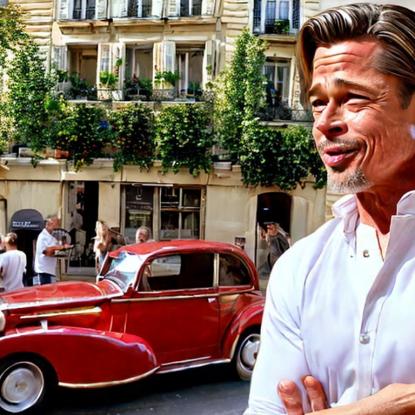

Using free versions of AI tools, AFP generated images in a style similar to paintings of the hyperrealist movement, such as the left-hand example below showing Brad Pitt in Paris.

Another image, on the right below, was made with similar keywords on DALL-E and is less obviously AI-generated.

AI-generated image created with Stable Diffusion on March 22, 2023 using the phrase: "Brad Pitt, street of Paris, early and sunny morning, holding a croissant, near a bakery and old French car, wearing a white shirt, smiling, realistic, 4K"

AI-generated image created on March 22, 2023 using DALL-E and the phrase: "Brad Pitt in Paris, photo, shops in the background, 4K"

Visual inconsistencies

Despite the meteoric progress in generative AI, errors still show up in AI-generated content. These defects are the best way to recognise a fabricated image, specialists told AFP.

"Some characteristics, often the same ones, pose a problem for AI. It is these inconsistencies and artefacts that must be scrutinised, as in a game of spot the difference", said Terrasi of Draft & Goal.

However, Verdoliva of Frederick II University of Naples warned: "Generation methods keep improving over time and show fewer and fewer synthesis artefacts, so I would not rely on visual clues in the long term".

For example, as of March 2023, realistic hands were still difficult to generate. AFP's AI-generated photo of Pitt shows the actor with a disproportionately large finger.

An AFP journalist pointed out in February 2023 that a police officer had six fingers in a series of pictures allegedly taken during a demonstration against the French pension system reform on February 7, 2023.

The hand strikes again🖖: these photos allegedly shot at a French protest rally yesterday look almost real - if it weren't for the officer's six-fingered glove #disinformation #AI pic.twitter.com/qzi6DxMdOx

— Nina Lamparski (@ninaism) February 8, 2023

"Currently, AI images are also having a very difficult time generating reflections", Terrasi said. "A good way to spot an AI is to look for shadows, mirrors, and water, but also to zoom in on the eyes and analyse the pupils since there is normally a reflection when you take a photo. We can also often notice that the eyes are not the same size, sometimes with different colours."

Generators also often create asymmetries. Faces can be disproportionate, or ears can be different sizes.

Teeth and hair are difficult to imitate and can reveal, in their outlines or texture, that an image is not real.

And some elements can be poorly integrated, such as sunglasses that blend into a face.

Experts also say mixing together several images may create lighting problems in an AI-generated image.

Scenes from two of the images shared in the misleading posts showing inconsistent visual features

Check the background

A good way to spot these anomalies is to peer into the photo background. While it may seem normal at first glance, an AI-generated photo often reveals errors, such as in these photos allegedly showing children participating in a satanic ceremony.

For example, some photos show more than five fingers on the children's hands, while others show inconsistent limbs and incomplete facial features.

"The farther away an element is, the more an object will be blurred, distorted, and have incorrect perspectives", Terrasi said.

In the fake photo of an explosion at the Pentagon, the columns on the building are of mismatched sizes, and a lamp post in the foreground appears disjointed. The sidewalk also seems to blend in with the street, grass and fence in some places.

Screenshot from Twitter taken on May 22, 2023, with elements added by AFP using the InVID WeVerify Plugin's magnifier tool

Look at the text

AI-generated text in images, for example on storefronts or signs, often makes no sense and can even be totally illegible. When AFP generated images of Paris, for instance, the store names showed random strings of letters that did not form words.

Use common sense

Some elements may not be distorted, but they can still betray an error of logic. "It's good to rely on common sense" when you doubt an image, Terrasi said.

The photo below, generated by AFP with DALL-E and intended to show Paris, features a blue no-entry sign, which does not exist in France.

Photo generated with DALL-E on March 22, 2023, by AFP using the phrase: "Brad Pitt, street of Paris, early and sunny morning, holding a croissant, near a bakery and old French car, wearing a white shirt, smiling, realistic, 4K"

This clue, combined with the chopped fingertips of the central character, a plastic-looking croissant and a difference in lighting on some windows, suggests the photo is AI-generated.

The watermark at the bottom right of the image removes any doubt and signals that the shot is from DALL-E.

Finally, if an image claims to show an event but its credibility is in doubt, turn to reliable sources to look for possible inconsistencies.

For now, AI-generated videos that have not been further manipulated with professional video software are not as convincing as photos and contain many easily detectable imperfections. But as generative AI software progresses and offers better tools, the quality of these videos will also improve.

If you come across a video you think might have been created using AI, you can apply the same techniques.

AI-generated audio

Meanwhile, AI voice scams have raised concerns about the use of voice cloning tools in cybercrimes and in targeting well-known figures.

"AI voice cloning, now almost indistinguishable from human speech, allows threat actors like scammers to extract information and funds from victims more effectively", Wasim Khaled, chief executive of Blackbird.AI, told AFP.

"With a small audio sample, an AI voice clone can be used to leave voicemails and voice texts. It can even be used as a live voice changer on phone calls", Khaled added.

Artificial intelligence tools have also been used to recreate the vocals of famous artists, including a Beatles song made using a demo with John Lennon’s voice, set to be released later this year.

But they have also been used to make many copycat tracks.

"Heart on My Sleeve", a track featuring AI-generated copycats of Drake and The Weeknd, racked up millions of hits on social media platforms.

"We're fast approaching the point where you can't trust the things that you see on the internet", Gal Tal-Hochberg, group chief technology officer at the venture capital firm Team8, told AFP.

"We are going to need new technology to know if the person you think you're talking to is actually the person you're talking to", he said.

Copyright © AFP 2017-2026. Any commercial use of this content requires a subscription.
