AI disinformation fears grow as summit debates new technology

- This article is more than one year old.

- Published on February 21, 2025 at 22:02

- 5 min read

- By Claire-Line NASS, AFP France

- Translation and adaptation Satya Amin, AFP USA

As the summit opened, French President Emmanuel Macron cited a "need for rules" to govern artificial intelligence, in an apparent rebuff to US Vice President JD Vance, who had criticized excessive regulation.

The debate came amid growing concerns over the rise of AI, from deepfakes aimed at influencing elections to chatbots that relay false answers.

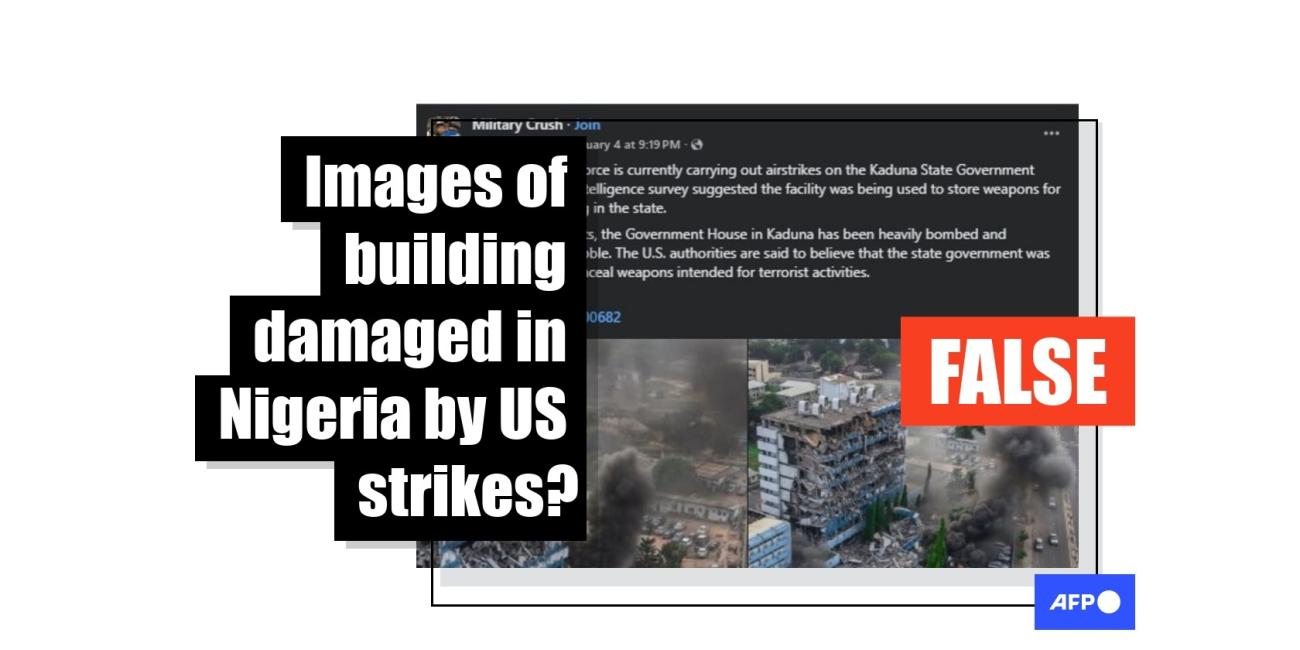

Analysts say AI has become a widely used tool in fueling disinformation.

In early 2024, an alleged recording garnered significant attention: the leader of Slovakia's pro-European political party could be heard admitting the elections were going to be rigged. But, as confirmed by an AFP investigation, the audio was a deepfake, content fabricated with AI to imitate real or non-existent people.

During the 2024 US election campaign, then-president Joe Biden was seemingly heard calling New Hampshire voters and advising them not to vote in the primary election. But it was also a deepfake (archived here).

All over the world, politicians are facing this type of manipulated content, which can easily attract tens of thousands of interactions on social media.

This was the case for Macron, whose voice was edited to make it seem as if he was announcing his resignation in a widely shared video with a doctored soundtrack.

AFP's global fact-checking team has verified numerous AI-generated posts depicting leaders ranging from US President Donald Trump and Russian President Vladimir Putin to Canadian Prime Minister Justin Trudeau and former New Zealand prime minister Jacinda Ardern.

Pornographic deepfakes

The Sunlight Project, a research group on misinformation, warned recently that all women are potentially vulnerable to pornographic deepfakes (archived here).

This is particularly true for female politicians. AFP has identified elected officials in the United Kingdom, Italy, the United States and Pakistan who have been the targets of AI-generated pornographic images. Researchers fear this worrying trend may discourage women's public participation (archived here).

Sexually explicit deepfakes also regularly target celebrities. This was the case for American superstar Taylor Swift in January 2024. One deepfake of the pop sensation was viewed 47 million times before being taken down (archived here).

Disinformation operations

AI is also being used in large-scale digital interference operations, which attempt to influence public opinion, usually through social media.

Pro-Russian disinformation campaigns known as Doppelgänger, Matriochka and CopyCop are some of the most high-profile examples. Their creators have used fake profiles, or bots, to publish AI-generated content, with the goal of diminishing Western support for Ukraine.

"What's new is the scale and ease with which someone with very few financial resources and time can disseminate false content that, on the other hand, appears increasingly credible and is increasingly difficult to detect," said Chine Labbé, editor-in-chief of NewsGuard, an organization that analyzes the reliability of online sites and content (archived here).

The rise of groups using AI content to shape public opinion is particularly worrying in countries experiencing conflict.

An AFP investigation revealed that supporters of warring factions in Ethiopia take advantage of ethnic divisions within the country, as well as poor media literacy skills, to spread AI-generated disinformation, which runs the risk of renewing violence.

The US Federal Bureau of Investigation warned in a May 2024 report that "AI provides augmented and enhanced capabilities to schemes that attackers already use and increases cyber-attack speed, scale, and automation" (archived here).

According to FBI Special Agent in Charge Robert Tripp: "As technology continues to evolve, so do cybercriminals' tactics."

'Web pollution'

No sector is immune to the risk posed by AI: fake music videos are often spread online, as are fabricated photos of historical events, generated in just a few clicks (archived here).

As early as 2022, an alleged song by American rapper Eminem in which he seemingly criticized the former president of Mexico was mistaken for a real song by thousands of internet users. In reality, the audio was generated using AI software.

In 2024, AFP investigated what appeared to be an 1873 image of the rarely photographed French poet Arthur Rimbaud. However, the photo was digitally generated. The image's author told AFP that the photo is an imagined moment, meant to resemble a scene that could potentially have occurred.

Some individuals also use AI to generate online engagement. On Facebook, accounts increasingly publish evocative AI images, not necessarily to circulate false information, but rather to capture users' attention for commercial purposes or even to identify gullible individuals for future scams.

At the end of December 2024, when the story of a man who set fire to a woman in the New York subway was making headlines, an alleged photo of the victim spread rapidly on social media. In reality, it was AI-generated with the aim of directing social media users to a cryptocurrency seeking to capitalize on the tragedy.

Another trend involves deepfakes of well-known doctors. Retired American neurosurgeon Ben Carson has repeatedly featured in deepfakes, in which he seemingly promotes unfounded medical cures for diseases ranging from high blood pressure to dementia.

"Beyond the risk of misinformation, there's also the risk of web pollution: you never know whether you're dealing with content that has been verified and edited by a thorough human being, or whether it's generated by AI with no concern for truthfulness and accuracy," NewsGuard's Labbé said.

After major news events, a flood of AI images is generated. Numerous dubious posts capitalized on the massive fires in Los Angeles in early 2025 to spread fake photos, including images of the "Hollywood" sign in flames and of an Oscar amid ashes, that were shared around the world.

Unreliable answers

Popular chatbots, such as ChatGPT, developed by the American AI company OpenAI, can also propagate false claims. In fact, "they tend to quote AI-generated sources first, so it's a case of the snake biting its own tail," Labbé said.

Research published in early February by NewsGuard also shows that these chatbots typically generate more disinformation in languages such as Russian and Chinese, in which they are more susceptible to state propaganda narratives (archived here).

The growing popularity of the Chinese tool DeepSeek has raised national security concerns. Its tendency to repeat official Chinese stances in its responses to user questions (archived here) further shows the need to impose safety frameworks on AI.

Labbé says one solution would be to teach chatbots "to recognize reliable sources from propaganda sources."

AFP has also identified false claims spread through answers that Amazon's Alexa generated from unreliable sources, as well as misinterpretations of Google's AI search summaries.

Copyright © AFP 2017-2026. Any commercial use of this content requires a subscription.