ChatGPT spawns false claim about Canadian politician's wife

- Published on March 31, 2023 at 22:02

- Updated on April 29, 2024 at 17:09

- By Gwen Roley, AFP Canada

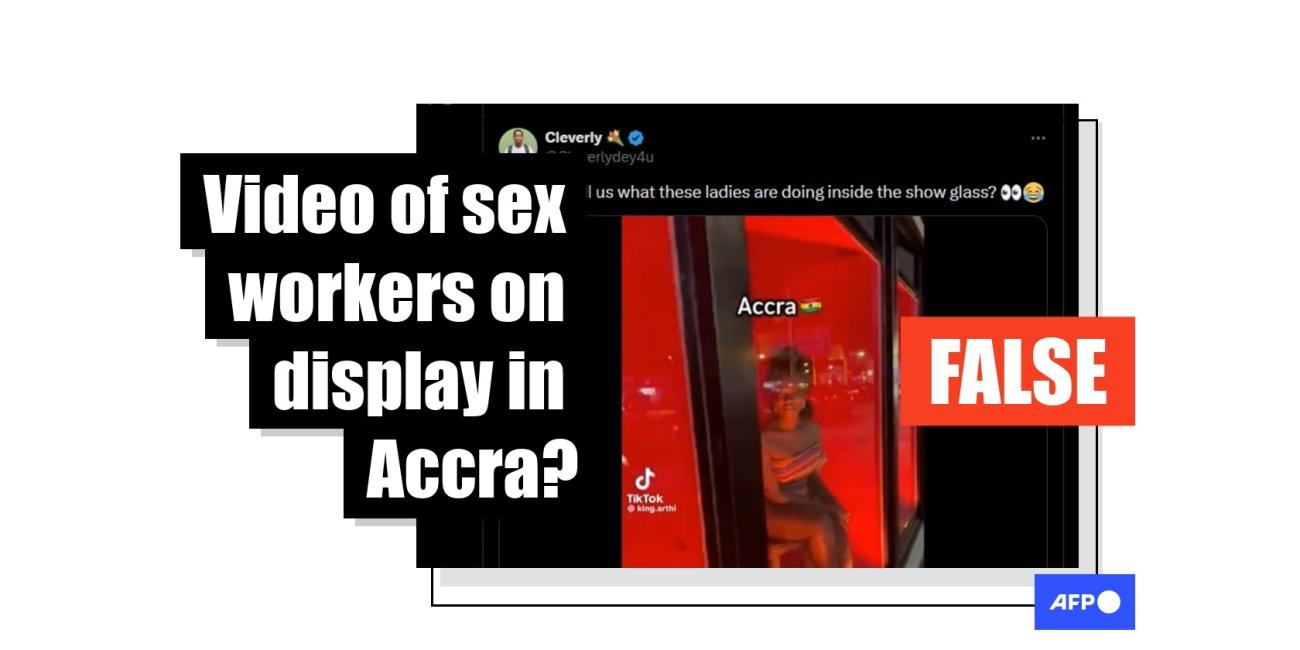

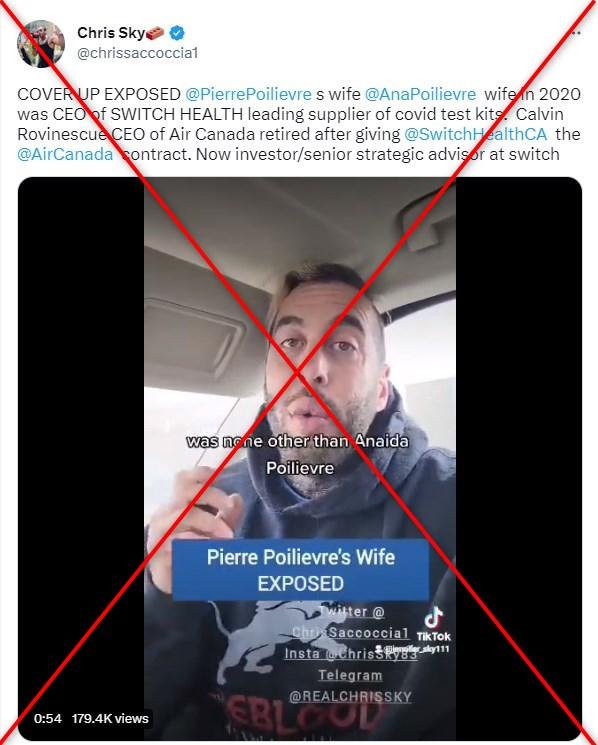

"COVER UP EXPOSED @PierrePoilievres wife @AnaPoilievre wife in 2020 was CEO of SWITCH HEALTH leading supplier of covid test kits," says the text of a March 5, 2023 tweet, accompanied by a video. The video features Chris Saccoccia, better known as Chris Sky, an activist who gained renown for defying Canada's pandemic-era travel restrictions and whose claims AFP has previously fact-checked.

In the video, which also spread to Facebook, Saccoccia says he asked the AI-powered program ChatGPT about contracts obtained during the pandemic by Switch Health.

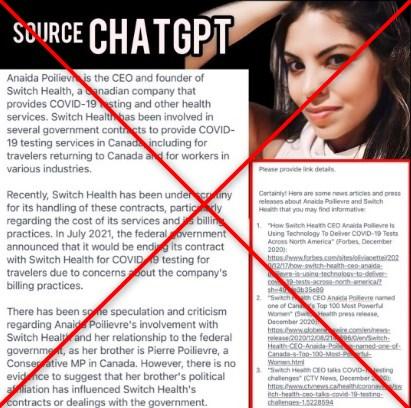

He claimed the AI chatbot provided him with links to articles describing how Anaida Poilievre was CEO of the medical testing firm. Similar claims appeared on TikTok.

In replies and later tweets, Saccoccia shared AI-generated links and headlines for articles purportedly from CTV and CBC, among others, but following the links or searching for the headlines leads only to error pages.

Saccoccia claimed this information was "scrubbed" from the internet to cover up a conspiracy between the Canadian government, the travel industry and private healthcare to make money off travelers during the pandemic.

In 2021, Switch Health did partner with Air Canada to provide Covid-19 rapid tests to travelers, for a fee, to meet Canada's entry requirements at the time.

The company was founded in 2017 with Dilian Stoyanov as CEO and later named co-founder Marc Thomson co-CEO.

The Conservative leader's spokesman said his wife, who is a co-founder of a women's lifestyle website, never worked at the medical diagnostics company.

"These claims are clearly and unequivocally false," Sebastian Skamski said in a March 22 email. "Mrs. Poilievre has had no affiliation whatsoever with Switch Health."

AFP also reached out to Switch Health for comment but did not receive a response.

Chatbots' sourcing problem

ChatGPT was launched by OpenAI in November 2022 and was followed by chatbot programs from Google and Microsoft in early 2023.

AI chatbots respond to a prompt by generating text based on patterns learned from the wide swaths of data they were trained on. This can produce accurate answers, but that data is limited in scope and accuracy, so a chatbot can also produce answers with no basis in fact.

"The sources of the data are in places where disinformation goes around, where stereotypes go around, and that then can influence the output," said Gideon Blocq, the CEO of VineSight, a company that uses AI to detect potential online misinformation.

Blocq said this blind spot in the model is something OpenAI and other companies are working to remedy so falsehoods are not injected into chatbots' responses.

NewsGuard, a company that tracks online misinformation, tested ChatGPT by giving it leading prompts about false narratives. Eighty percent of the time, the chatbot responded with content supporting the false information.

"It would not only relay factually incorrect information, but it would just pull studies out of thin air to back up its claim," said Jack Brewster, NewsGuard's enterprise editor.

NewsGuard ran the same experiment with leading prompts on the newer GPT-4, which responded with false information 100 percent of the time.

"This technology can lower the barrier to entry for bad actors to spread misinformation at a large scale," Brewster said. "When we get it to produce well-written articles, quotes, tweets, any kind of content containing misinformation it can be used effectively as a weapon."

Among the alleged headlines about Poilievre were stories said to be from the BBC, CTV News, The Globe and Mail, CBC and Forbes, all of which confirmed to AFP that they had never published such articles. The other purported articles, attributed to Global Newswire, Global News, Yahoo and Sportsnet, do not appear online or in internet archives.

OpenAI says on its help page that ChatGPT can sometimes provide inaccurate information, due to its limited database. When asked for more information on the limitations of its program, the company declined to comment.

Both Brewster and Blocq said that while the technology needs to be improved, users also need to be taught how chatbot models work and how to spot inaccurate AI-generated content when it appears.

AI-generated images are also in the spotlight for causing social media users to believe false claims. Blocq said this trend can mainly be countered by looking critically at sources of information.

"Looking at the content itself will just be an endless game of cat and mouse and you'll just spend a lot of time likely not getting anywhere," Blocq said.

More of AFP's reporting on AI-generated misinformation can be found here.

Copyright © AFP 2017-2026. Any commercial use of this content requires a subscription.